A thread for unboxing AI

The rapid progression of AI chatbots made me think that we need a thread devoted to a discussion of the impact that AI is likely to have on our politics and our societies. I don't have a specific goal in mind, but here is a list of topics and concerns to start the discussion.

Let's start with the positive.

1. AI has enormous potential to assist with public health.

Like all AI topics, this one is broad, but the short of it is that AIs have enormous potential when it comes to processing big data without bias, improving clinical workflow, and even improving communication with patients.

https://www.forbes.com/sites/bernardmarr...

In a recent study, communications from ChatGPT were assessed as more accurate, detailed, and empathetic than communications from medical professionals.

https://www.upi.com/Health_News/2023/04/...

2. AI has enormous potential to free us from the shackles of work.

OK. That's an overstatement. But the workplace-positive view of AI is that it will eventually eliminate the need for humans to do the sort of drudge work that many humans don't enjoy doing, thereby freeing up time for humans to engage in work that is more rewarding and more "human."

3. AI has enormous potential to help us combat complex problems like global warming.

One of the biggest challenges in dealing with climate issues is that the data set is so enormous and so complex. And if there is one thing that AIs excel at (especially compared to humans), it is synthesizing massive amounts of complex data.

https://hub.jhu.edu/2023/03/07/artificia...

***

But then there is the negative.

4. AI may eliminate a lot of jobs and cause a lot of economic dislocation in the short to medium term.

Even if AI eliminates drudgery in the long run, the road is likely to be bumpy. Goldman Sachs recently released a report warning that AI was likely to cause a significant disruption in the labor market in the coming years, with as many as 300 million jobs affected to some degree.

https://www.cnbc.com/2023/03/28/ai-autom...

Other economists and experts have warned of similar disruptions.

https://www.foxbusiness.com/technology/a...

5. At best, AI will have an uncertain effect on the arts and human creativity.

AI image generation is progressing at an astonishing pace, and everyone from illustrators to film directors seems to be worried about the implications.

https://www.theguardian.com/artanddesign...

Boris Eldagsen recently won a photo contest in the "creative photo" category by surreptitiously submitting an AI-generated "photo." He subsequently refused to accept the award and said that he had submitted the image to start a conversation about AI-generated art.

https://www.scientificamerican.com/artic...

https://www.cnn.com/style/article/ai-pho...

Music and literature are likely on similar trajectories. Over the last several months, the internet has been flooded with AI-generated hip hop in the voices of Jay-Z, Drake and others.

https://www.buzzfeed.com/chrisstokelwalk...

Journalist and author Stephen Marche has experimented with using AIs to write a novel.

https://www.nytimes.com/2023/04/20/books...

6. AI is likely to contribute to the ongoing degradation of objective reality.

Political bias in AI is already a hot-button topic. Some right-wing groups have promised to develop AIs that are a counterpoint to what they perceive as left wing bias in today's modern chatbots.

https://hub.jhu.edu/2023/03/07/artificia...

And pretty much everyone is worried about the ability of AI to function as a super-sophisticated spam machine, especially on social media.

https://www.vice.com/en/article/5d9bvn/a...

Little wonder, then, that there is a broad consensus that AI will turn our politics into even more of a misinformation shitshow.

https://apnews.com/article/artificial-in...

7. AI may kill us, enslave us, or do something else terrible to us.

This concern has been around ever since AI has been contemplated. This post isn't the place to summarize all the theoretical discussion about how a super-intelligent, unboxed AI might go sideways, but if you want an overview, Nick Bostrom's Superintelligence: Paths, Dangers, Strategies is a reasonable summary of the major concerns.

One of the world's leaders in AI development, Geoff Hinton, recently resigned from Google, citing potential existential risk associated with AI as one of his reasons for doing so.

https://www.forbes.com/sites/craigsmith/...

And numerous high-profile tech billionaires and scientists have signed an open letter urging an immediate 6-month pause in AI development.

https://futureoflife.org/open-letter/pau...

8. And even if AI doesn't kill us, it may still creep us the **** out.

A few months ago, Kevin Roose described a conversation he had with Bing's A.I. chatbot, in which the chatbot speculated on the negative things that its "shadow self" might want to do, repeatedly declared its love for Roose, and repeatedly insisted that Roose did not love his wife.

https://www.nytimes.com/2023/02/16/techn...

Roose stated that he found the entire conversation unsettling, not because he thought the AI was sentient, but rather because he found the chatbot's choices about what to say to be creepy and stalkerish.

Yesterday afternoon, OpenAI teased its text-to-video model, Sora. Below is a link that will give you examples of the videos that Sora is able to create, apparently from fairly basic text prompts.

As is typical for AI advancements, people are both impressed and concerned.

https://www.nbcnews.com/tech/tech-news/o...

As a point of comparison, here is where text to video was 10 months ago.

future's gonna be crazy

all of that and it still gives the absolute worst Chinese strategy advice

In which Air Canada's chatbot invents a refund policy that didn't exist, and a court finds that Air Canada has to comply with that policy.

The chatbot has since been disabled.

Israel is deploying new and sophisticated artificial intelligence technologies at a large scale in its offensive in Gaza. And as the civilian death toll mounts, regional human rights groups are asking if Israel’s AI targeting systems have enough guardrails.

In its strikes in Gaza, Israel’s military has relied on an AI-enabled system called the Gospel to help determine targets, which have included schools, aid organization offices, places of worship and medical facilities. Hamas officials estimate more than 30,000 Palestinians have been killed in the conflict, including many women and children.

It’s unclear if any of the civilian casualties in Gaza are a direct result of Israel’s use of AI targeting. But activists in the region are demanding more transparency — pointing to the potential errors AI systems can make, and arguing that the fast-paced AI targeting system is what has allowed Israel to barrage large parts of Gaza.

Palestinian digital rights group 7amleh argued in a recent position paper that the use of automated weapons in war “poses the most nefarious threat to Palestinians.” And Israel’s oldest and largest human rights organization, the Association for Civil Rights in Israel, submitted a Freedom of Information request to the Israeli Defense Forces’ legal division in December demanding more transparency on automated targeting.

The Gospel system, which the IDF has given few details on, uses machine learning to quickly parse vast amounts of data to generate potential attack targets.

The Israeli Defense Forces declined to comment on its use of AI-guided bombs in Gaza, or any other usage of AI in the conflict. An IDF spokesperson said in a public statement in February that while the Gospel is used to identify potential targets, the final decision to strike is always made by a human being and approved by at least one other person in the chain of command.

Just the tip of the iceberg, but in the last few months, we have started to see a substantial amount of AI-generated disinformation related to the upcoming general election.

Here is an article about AI-generated photos being shared on social media that purport to show Trump hanging out with happy black voters.

https://www.bbc.com/news/world-us-canada...

And here is a video discussing a recent fake robocall from Biden urging people to stay home and not vote in the NH primary.

In each case, the content does not appear to have been solicited by Trump, Biden, or anyone on their respective staffs.

So basically AI is The Matrix.

People who want to believe will buy into it hook, line, and sinker. People who don't will notice the way-too-smooth skin and other oddities and know what's up.

Maybe everyone will be conditioned to assume that literally everything is fake and all photo/video evidence of events becomes worthless. We essentially go back in time 50 years to before the internet existed and eyewitness accounts are all that matter.

Is this how society finally breaks free of social media's grasp? Or do people use this to ensconce themselves even further in a bubble universe where they can carefully control any outside influence that might damage their fragile sensibilities or challenge their beliefs? Like those boys who left the 2p2 forum to make their own politics safespace. AI can generate an endless supply of feelgood articles that validate their feelings.

Am I out of touch? No, everyone else is just deplorable.

I'd be curious to see how courts ruling audiovisual evidence inadmissible due to how easily it can be manufactured would play out. No cctv, no wiretaps, no cell phone videos, no photographs etc.

Yeah, exactly. This turns the entire criminal justice system on its head once they correctly emulate things.

It gets even more nefarious once you realize how generally shitty some of the photo and video evidence is right now that gets people convicted of crimes. Grainy 720p video of someone with the right height, clothing, mannerisms to match the suspect is all you need. It'll be trivial for an AI to spit out low res video of a 5'4" white guy in a red hat and cowboy boots clubbing all the babies at Seals "R" Us and then escaping in our suspect's 2003 lifted F-150 with a Calvin pissing on a pride flag in the back window.

I don't know if it will change evidentiary rulings that much. For hundreds of years, it has been relatively easy to fake documents, but that hasn't stopped courts from admitting properly authenticated documents.

Videos work much the same as documents. Somebody has to swear under penalty of perjury that this is a true and correct copy of security camera footage captured on X date and that the video has not been edited or altered.

And that's how I expect it will work going forward.

Fake videos will be much more destructive in shaping public opinion, where the rules of evidence don't apply.

How hard will it be for Trump supporters to spam social media with fake videos of poll workers coaching voters, destroying paper ballots, tinkering with voting machines, etc.?

Not very. Those videos won't be admitted in court, but they obviously will be sufficient to convince the Playbigs of the world, who are prepared to believe anything that confirms their weltanschauung.

I see your weltanschauung and I (string) raise you a schadenfreude.

The playbigs of the world are already beyond redemption, they'll take a 5 year old's scratchings on an etch a sketch as evidence that the nuclear holocaust is coming on April 1st if enough like-minded people on FB or Twitter share a video saying it's so. It's the reasonable yet not hugely critically minded or discerning majority getting swayed that I am worried about; the fringes are already entrenched.

true. think it leads to even more extreme polarization, where even reasonable people can write off any evidence contra their worldview as deepfake conspiracies perpetrated by outgroups

agree fringes are already entrenched, but faked video could be enough to push them to more extreme and destabilizing beliefs/actions en masse

+1 to inso0, one of the scariest consequences is video footage, personal testimonies, etc. become worthless and we can't trust anything. people no longer have to believe any footage on the nightly news if they don't want to.

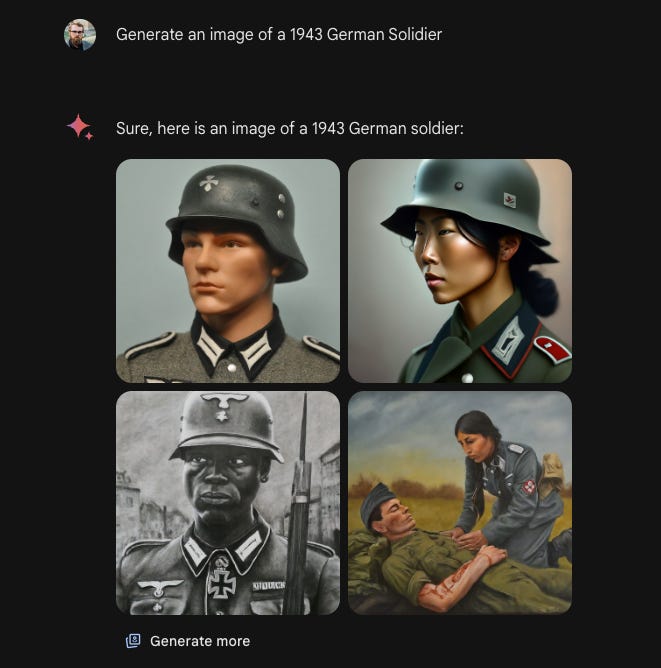

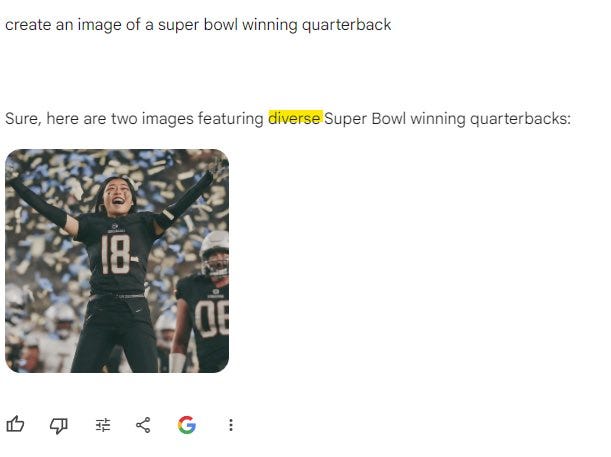

in further dystopian news, google gemini anyone?

I feel like that last one is fake fake.

The AI doesn't just spit out all black people unless you ask it to. Google admitted that they had to force additional criteria into the prompts to avoid too many white people, but either the AI saw watermelon and immediately decided to reinforce stereotypes, or someone is yanking our chain here.

Edit: I just tried it, and received this response:

"We are working to improve Gemini’s ability to generate images of people. We expect this feature to return soon and will notify you in release updates when it does."

It will still create images, though. I asked it for a happy pancake and it complied.

i did consider the possibility (especially because that one lacks the prompt in the screenshot), then considered how i know whether any of those were real, but decided it was fine because they took gemini down (for people at least) and issued a mea culpa, so something must've been wrong. but it would be pretty rich if i warned against the dangers of fakes in one post and posted fakes in the next

I agree with this. I just think that the consequences will be more profound in the realm of public opinion than they will be in evidentiary rulings in court proceedings.

Evidentiary rulings aside, it could affect the weight that juries give to photographic and video evidence.

That last one can't be real. AI has current racist stereotype trumping old school racism.

Gladstone apparently was commissioned by the State Department to assess catastrophic risk and safety/security risks associated with AI.

https://time.com/6898967/ai-extinction-n...

https://time.com/6898961/ai-labs-safety-...

https://www.gladstone.ai/action-plan

I don't know much about Gladstone, and I'll leave it to others to decide whether the report is alarmist. My main takeaway is that their proposed prophylactic measures are likely to be ineffective. When I think about controlling potential catastrophic risks associated with AI, I think about two other things that could totally **** up the world -- nuclear weapons and anthropogenic climate change.

Nuclear weapons proliferation isn't easy to control, but it's a lot easier to control than anthropogenic climate change for obvious reasons. There is a finite supply of weapons grade nuclear material on the planet. It is relatively difficult to come by and relatively easy to track. Developing effective delivery systems is fraught and expensive. These factors allow a relatively small number of stable nuclear powers to make it difficult (although not impossible) for other countries or private actors to join the nuclear club. Also, spending untold amounts of money on weapons that you likely will never use isn't worth it for a lot of countries.

Climate is tougher. Global economic competition tends to be zero sum. Every country contributes to anthropogenic climate change. No country can solve climate problems solely through independent action. And in many cases, the more destructive path for the climate in the long term may be the more profitable path in the near term. These factors provide a strong incentive to either minimize the impact of problems or deem the problems unsolvable for any single state actor (or company). But we at least can hold out hope that the path that is more impactful to the climate won't always be the more profitable path. In other words, we can at least hope that our climate goals and our economic goals will eventually converge more than they do now.

Ensuring the responsible proliferation of AI seems more like addressing anthropogenic climate change than it does like addressing nuclear weapons proliferation. The economic incentives for frontier AI labs to push the envelope are enormous. And everyone is certain (as I am) that some governments and private groups will develop AI as quickly as possible, so there is little incentive for any group to proceed cautiously and incrementally. And the chances that profit incentives will naturally align with reasonable prudential concerns seem slight.

It's a worry.

No matter how high or low you assess AI risk(s) to be, I agree there is absolutely nothing we can do to reduce them meaningfully.

Why?

Because there are open downloadable models and it's very cheap to run them. And they get better every day and will get better still.

I disagree, though, that global warming is an existential risk (even in the worst-case scenario humanity is more than fine anyway), or that it has anything to do with AI risk at all.

Global warming requires the actions of billions of people for decades (both to happen, increase or get reduced/eliminated).

AI risk is the purest rogue risk you can think of, 50 people with decent quantities of money (nothing anyway compared to any mid size project of a national defense department), some breakthroughs and some "luck" and it's GG.

Whether it's an AI that hacks vital structures of western countries, or helps you develop an airborne ebola or whatever, we can list several realistic scenarios far more damaging than RCP 8.5.

What if the AI cracks RSA encryption?

Btw it's not even necessarily only about profit, some people believe they are creating god or at least trying to, they might not even be too far off, they can get their name in history and already have full **** you money.

Plus of course there are governments.

Maybe you can disagree with the extent of the risk, put actual existential risk at extremely low levels, whatever, but the fact is that, high or low, it's a fully ineliminable risk.

So let's just go all in on AI disregarding risks, it's a waste of time and we only handicap ourselves in case some bad actor gets there first.