A thread for unboxing AI

The rapid progression of AI chatbots made me think that we need a thread devoted to a discussion of the impact that AI is likely to have on our politics and our societies. I don't have a specific goal in mind, but here is a list of topics and concerns to start the discussion.

Let's start with the positive.

1. AI has enormous potential to assist with public health.

Like all AI topics, this one is broad, but the short of it is that AIs have enormous potential when it comes to processing big data without bias, improving clinical workflow, and even improving communication with patients.

https://www.forbes.com/sites/bernardmarr...

In a recent study, communications from ChatGPT were assessed as more accurate, detailed, and empathetic than communications from medical professionals.

https://www.upi.com/Health_News/2023/04/...

2. AI has enormous potential to free us from the shackles of work.

OK. That's an overstatement. But the workplace-positive view of AI is that it will eventually eliminate the need for humans to do the sort of drudge work that many humans don't enjoy doing, thereby freeing up time for humans to engage in work that is more rewarding and more "human."

3. AI has enormous potential to help us combat complex problems like global warming.

One of the biggest challenges in dealing with climate issues is that the data set is so enormous and so complex. And if there is one thing that AIs excel at (especially compared to humans), it is synthesizing massive amounts of complex data.

https://hub.jhu.edu/2023/03/07/artificia...

***

But then there is the negative.

4. AI may eliminate a lot of jobs and cause a lot of economic dislocation in the short to medium term.

Even if AI eliminates drudgery in the long run, the road is likely to be bumpy. Goldman Sachs recently released a report warning that AI was likely to cause a significant disruption in the labor market in the coming years, with as many as 300 million jobs affected to some degree.

https://www.cnbc.com/2023/03/28/ai-autom...

Other economists and experts have warned of similar disruptions.

https://www.foxbusiness.com/technology/a...

5. At best, AI will have an uncertain effect on the arts and human creativity.

AI image generation is progressing at an astonishing pace, and everyone from illustrators to film directors seems to be worried about the implications.

https://www.theguardian.com/artanddesign...

Boris Eldagsen recently won a photo contest in the "creative photo" category by surreptitiously submitting an AI-generated "photo." He subsequently refused to accept the award and said that he had submitted the image to start a conversation about AI-generated art.

https://www.scientificamerican.com/artic...

https://www.cnn.com/style/article/ai-pho...

Music and literature are likely on similar trajectories. Over the last several months, the internet has been flooded with AI-generated hip hop in the voices of Jay-Z, Drake and others.

https://www.buzzfeed.com/chrisstokelwalk...

Journalist and author Stephen Marche has experimented with using AIs to write a novel.

https://www.nytimes.com/2023/04/20/books...

6. AI is likely to contribute to the ongoing degradation of objective reality.

Political bias in AI is already a hot-button topic. Some right-wing groups have promised to develop AIs that are a counterpoint to what they perceive as left-wing bias in today's chatbots.

https://hub.jhu.edu/2023/03/07/artificia...

And pretty much everyone is worried about the ability of AI to function as a super-sophisticated spam machine, especially on social media.

https://www.vice.com/en/article/5d9bvn/a...

Little wonder, then, that there is a broad consensus that AI will turn our politics into even more of a misinformation shitshow.

https://apnews.com/article/artificial-in...

7. AI may kill us, enslave us, or do something else terrible to us.

This concern has been around ever since AI has been contemplated. This post isn't the place to summarize all the theoretical discussion about how a super-intelligent, unboxed AI might go sideways, but if you want an overview, Nick Bostrom's Superintelligence: Paths, Dangers, Strategies is a reasonable summary of the major concerns.

One of the world's leaders in AI development, Geoff Hinton, recently resigned from Google, citing potential existential risk associated with AI as one of his reasons for doing so.

https://www.forbes.com/sites/craigsmith/...

And numerous high-profile tech billionaires and scientists have signed an open letter urging an immediate 6-month pause in AI development.

https://futureoflife.org/open-letter/pau...

8. And even if AI doesn't kill us, it may still creep us the **** out.

A few months ago, Kevin Roose described a conversation he had with Bing's A.I. chatbot, in which the chatbot speculated on the negative things that its "shadow self" might want to do, repeatedly declared its love for Roose, and repeatedly insisted that Roose did not love his wife.

https://www.nytimes.com/2023/02/16/techn...

Roose stated that he found the entire conversation unsettling, not because he thought the AI was sentient, but rather because he found the chatbot's choices about what to say to be creepy and stalkerish.

Not sure how much smaller than 2 nanometers you think we can make transistors using silicon, but the answer is not very.

Many computational tasks cannot be multi-threaded, so our current strategy of just stacking several extra layers on the die won't really help.

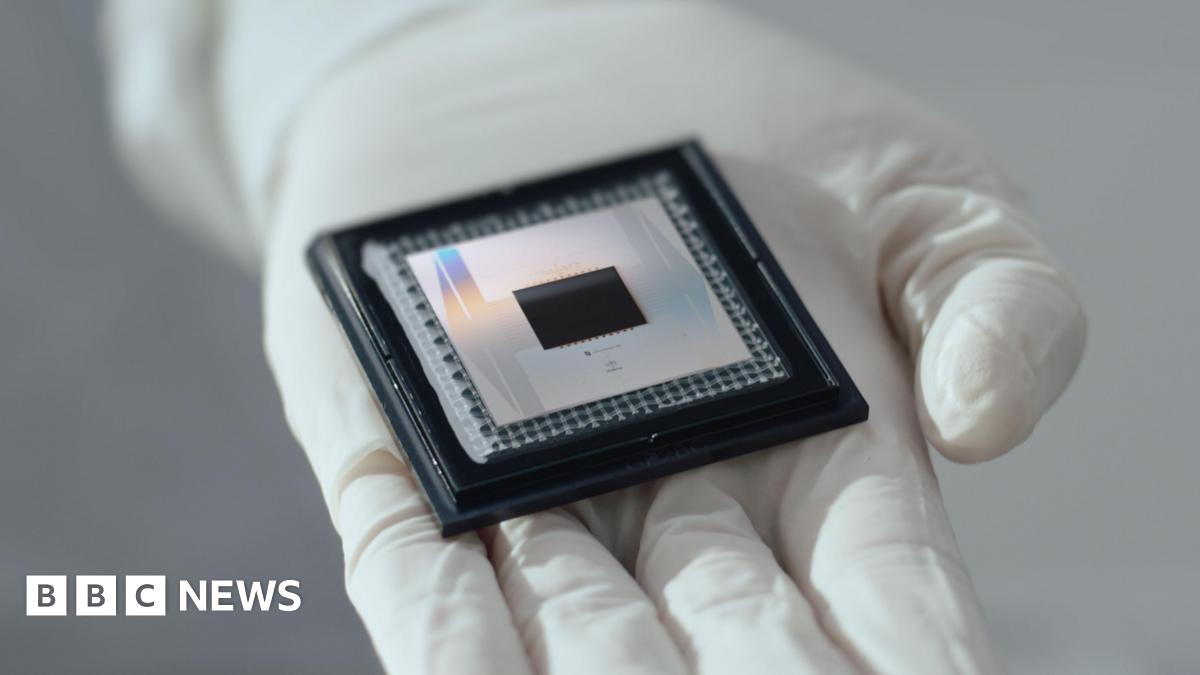

It's the time scale rococo is talking about, 30-50 years, combined with the idea that silicon is the constraint, that triggered my amusement centers, given that just a few weeks ago we had this headline.

Google has unveiled a new chip which it claims takes five minutes to solve a problem that would currently take the world's fastest super computers ten septillion – or 10,000,000,000,000,000,000,000,000 years – to complete.

If we were talking 5-10 years or even 10-20 years, then we might be relying on various techniques to get our Moore's law type speed increases, but 30-50 years? It ain't going to be limited by silicon. The chances of qubits not having moved solidly into the realm of general purpose computation (or even simulations) in 30-50 years are just so minimal. Unless we consider effective extinction, which is far more likely than silicon limiting us if we soldier on.

Something I wrote a few years ago:

There's been a lot of reporting and discussion lately around artificial intelligence (AI) and its potential dangers and downfalls, but one aspect that almost never gets brought up is how it's going to affect creativity and art. AI is going to be used to generate music, movies, books and visual art.

I was thirteen when Green Day's video for the song "Longview" first played on MTV, and man, it was like nothing I'd heard before. It instantly connected with me, and I went out and bought the CD a few days later. I got a guitar tablature book for the album at Guitar Center, learned every song and used to play along to them on my boombox with my black Epiphone Stratocaster. It wasn't long before I picked up Green Day's first two CDs, 1039 Smoothed Out Slappy Hours and Kerplunk.

The singer and songwriter, Billie Joe Armstrong, was 18 and 19, respectively, when those albums were released, but some of the songs dated back even further. The music I was experiencing came directly from the heart and soul of a really talented kid who was only a few years older than I was at the time of my discovery. These early songs weren't on some next level lyrically, but they were very real and exactly what I needed at the time—right from his experiences and whatever influences he drew inspiration from. Here's an example from their song "Dry Ice":

Oh I love her

Keep dreaming of her

Will I understand

If she wants to be my friend?

I'll send a letter to that girl

Asking her to be my own

But my pen is writing wrong

So I'll say it in a song

As a thirteen-year-old kid who liked girls but had no clue how to talk to them, this was the best. I could easily make sense of the lyrics, and they spoke even deeper because I was relating to another person, whether or not I was aware of it at the time, though I believe I was. It let me know that I wasn't alone and there were other people out there who felt the same way.

With AI, I have to question whether or not these types of songs will still widely exist and whether or not people will be as likely to form that same type of psychical connection with artists. I think with the latter, it's clear that a lot of that will be lost based on what I'm about to get into. As to whether or not these kinds of songs will still exist, what's to stop a developing songwriter from using a program to write lyrics that are well beyond their capabilities and not really real at all?

That isn't even taking into account the music itself. Of course, people will still play guitars, but when someone prompts an AI program to, let's say, write a three-minute song, in the style of Green Day, about a girl, with references to Phoebe Cates, it will spit something out. The artist can then play around with that until they get what they like, but no matter how many prompts they enter or how many times they change it, it won't truly be coming from them. The information used to create a song like this would be sourced from a giant sea of information on the internet, mashed up and interpreted based on how the programmers trained the program (or how it trained itself), and the program would then do its best to give the artist what they were looking for.

Information on the internet isn't the same as what happens inside the human mind. Just think of your social media profiles. Are those accurate representations of you? They're just surface images and only a fraction of you. They don't see inside. This is true for every piece of written language. The internet only has access to what's written down but not everything that people think and feel (no matter how much it analyzes them), and that's where art is born. Even if some kid wants to write a simple song, it will still be missing a lot of authentic characteristics.

There's also a lot of growth that goes along with being an artist, both on the creative side and as it relates to personal development, since artists work through their ideas, often in great detail, and practice. AI will stifle so much of that. The art that we create and consume has an influence on us mentally and emotionally and shapes our worldview, and just the awareness of some of the things I've mentioned is already having an effect.

Movie studios will start using AI to write scripts instead of buying the rights from authors and hiring screenwriters. The products will be set up around psychological profiles and the interests of the population, which there are endless data points on, to create something that will draw in as many people as possible.

There might be some instances where it's a useful tool and not a bad thing, but most of the time it will be a shortcut to nowhere. Yes, there will still be organic art, but a lot of the time it will be difficult, if not impossible, to discern between what's real and what isn't, and gone will be the days of naturally gifted and thoughtful artists like David Bowie, Bill Watterson and Rod Serling since they will never be able to compete.

One can only hypothesize about how this sort of thing will play out, but I imagine it won't be for the best. Since AI isn't going away any time soon, the best we can do is talk and figure out how to navigate through the next point 0 of the digital age.

A fair chunk of humanity is going to struggle when faced with the fact of art going the same way as chess etc. as something special about human abilities.

It's also a part of all the jobs going. Even the famous actors being reproduced will get replaced with much more easily owned/controlled totally fictional celebrity characters.

But there is something special about human abilities when it comes to both. When you remove people from the equation, it gets boring. Magnus Carlsen is more impressive than AlphaZero because the [individual] human element is still there.

Yes, the spectacle gets boring when they're too good at it. Even humans who are too professional get called boring or robotic.

But the product (chess game, art) is better. When it comes to personalities with all their flaws and flourishes, the fictional characters will also display them.

With all these things people will protest, but they won't be able to tell the difference. As Douglas Adams said in a slightly different context:

Many people now say that the poems are suddenly worthless. Others argue that they are exactly the same as they always were, so what’s changed? The first people say that that isn’t the point. They aren’t quite certain what the point is, but they are quite sure that that isn’t it.

My guess is that none of the people who created AlphaZero could beat Magnus in an actual game. What does that say?

It tells us what we should already know: that AI etc. isn't limited by what we can program it to do.

I had this concert recorded on VHS and watched the **** out of it. Fun story:

Right around this time, I was at a Guitar Center looking to buy a bass. I started playing guitar a year or so earlier, but after hearing "Longview," I wanted to switch. Anyway, I was familiar with the bass Mike from Green Day played. It was a Gibson Ripper, and I wanted the same one. They hadn't been made since the 70s or 80s, so when I noticed one at Guitar Center, imagine my surprise. It was sitting next to the counter. I walked over, picked it up and started playing. Before long, an employee came up and took it away. He told me it wasn't for sale and that it belonged to the bass player from Green Day. They were setting it up and sending it back to the band. He probably had ten of them, but I still think it's cool that I got to play one of his basses.

Another turning point, a fork stuck in the road

Time grabs you by the wrist, directs you where rick rolls

So make the best of this test, and don't AI

It's not a question, but a lesson in AI

It's something unpredictable

But in the end, AI

I hope you had the time of your life

That will change so fast if computer storage/power is the constraint. It's a problem that already has a solution. The idea that the characters won't generate dialogue in character, rather than have it prerecorded, is kinda oddly grasping on to the past.

It's why I think you massively overestimate the computer resources required

and seriously lol at the idea we are near the end of silicon capacity (even if we don't find anything else) wtf?

I mentioned prerecorded dialogue solely to point out how far current games are from what I am describing.

Something I wrote a few years ago:

There's been a lot of reporting and discussion lately around artificial intelligence (AI) and its potential dangers and downfalls, but one aspect that almost never gets brought up is how it's going to affect creativity and art. AI is going to be used to generate music, movies, books and visual art.

I mentioned this exact point in the OP of this thread.

The latest and greatest chess computers weren't taught anything about chess by their creators.

Well, one important thing was taught: what defines winning.

In the end, what AlphaZero has shown us is that if the definition of success can be 100% objectively described in absolutely unambiguous ways, and the set of choices is limited by clear boundaries, AI can become better than the best human (and better than the best model that was taught human intuitions about the game).

Which is great, but it very soon ran into major practical limitations. AlphaFold is probably more important because it shows it's not about games, but rather about being able to 100% define success and failure.

Which is why we should be close to AI reading radiographies better than the best specialists, if we aren't there already.

It's curious, because the most glaring examples of success in AI aren't LLMs, but everyone these days almost exclusively talks about LLMs.

Creators certainly don't need to define winning for a chess computer. Whether they did so as a matter of pure expedience I don't know. If you fed a bunch of previously played games into a chess computer, it would be trivially easy for the computer to figure out both the rules of chess and what defines winning.

Yes, although you do have to have some way of determining which side won. You can't tell just by seeing the games played.

Re the general point: success doesn't need to be 100%. Any fitness function can work. The main one being survival.

No, no, that's not what the AlphaZero algorithm does.

It didn't learn from previously played games.

It was told "this is what we define winning in this game, try to minimize the chances of it happening for the other side".

It played against itself trying to minimize the chances of losing on either side, trying all moves randomly at first then learning what works and what doesn't in every position.

But it needed a target to achieve, objectively defined; that's the key. Games provide that systematically, and you can apply that approach only to situations in real life where you can define your goal objectively, with no ambiguity.
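To make the "told only what winning is, then plays itself" idea concrete, here is a minimal sketch on a toy game rather than chess. It is not AlphaZero's actual method (which pairs a neural network with tree search); it is a small lookup table trained by self-play with a one-step update. The game (take 1 to 3 stones from a pile, whoever takes the last stone wins) and every name and parameter below are assumptions made up for illustration. The only game knowledge in the code is the legal moves and the line that says taking the last stone is a win.

```python
import random

MAX_TAKE = 3  # assumed toy rule: a player may take 1, 2 or 3 stones per turn


def legal_moves(pile):
    return range(1, min(MAX_TAKE, pile) + 1)


def train(games=5000, max_pile=12, epsilon=0.3, lr=0.5, seed=0):
    """Learn move values purely from self-play and the definition of winning."""
    rng = random.Random(seed)
    # value[(pile, take)] estimates the chance that the player who takes
    # `take` stones from `pile` goes on to win. 0.5 means "no idea yet".
    value = {(p, m): 0.5 for p in range(1, max_pile + 1) for m in legal_moves(p)}
    for _ in range(games):
        pile = rng.randint(1, max_pile)
        while pile > 0:
            moves = list(legal_moves(pile))
            if rng.random() < epsilon:
                move = rng.choice(moves)  # explore: try a random move
            else:
                move = max(moves, key=lambda m: value[(pile, m)])  # use what was learned
            rest = pile - move
            if rest == 0:
                target = 1.0  # the objective definition of winning: took the last stone
            else:
                # The opponent moves next, so my chances are the complement of
                # the opponent's best estimated chances from the new pile.
                target = 1.0 - max(value[(rest, m)] for m in legal_moves(rest))
            value[(pile, move)] += lr * (target - value[(pile, move)])
            pile = rest
    return value


def best_move(value, pile):
    return max(legal_moves(pile), key=lambda m: value[(pile, m)])
```

Calling `value = train()` and then `best_move(value, 7)` shows what the table picked up. The known winning strategy for this game is to leave the opponent a multiple of four stones, and nothing in the code states that rule; it can only emerge from the win condition and repeated play. Swap the win condition for something fuzzy and there is no longer a clean `target` to learn from, which is the limitation being argued above.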

100% is about the definition of the end goal. You need a purely defined, objective end goal.

Survival is a self end goal. Evolution does very well, and it's the same for fitness-based evolutionary AI systems.

The fitness function partly determines what survives.
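As a rough illustration of a fitness function deciding what survives, here is a minimal evolutionary sketch. The bit-string genomes, the "count the 1 bits" fitness function, and all parameter values are hypothetical stand-ins chosen only to keep the example small; any other scoring function could be dropped in.

```python
import random


def fitness(genome):
    # Hypothetical stand-in for "how well does this individual do":
    # simply the number of 1 bits in the genome.
    return sum(genome)


def evolve(pop_size=30, genome_len=20, generations=40, mutation_rate=0.05, seed=0):
    """Keep the fitter half each generation and refill with mutated copies."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[: pop_size // 2]  # the fitness function decides who stays
        children = []
        for parent in survivors:
            # Each bit flips with probability mutation_rate.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in parent]
            children.append(child)
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    history.append(fitness(population[0]))
    return population[0], history
```

Running `best, history = evolve()` returns the fittest genome and the best score per generation. Because survivors are carried over unchanged, the best score can never go down. Nobody tells the population how to score well; the fitness function only determines who gets to reproduce.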

Right. And if you allowed the computer to see a bunch of games that played all the way to checkmate, with an indication of which side won, I'm sure it would be able to figure out what was required in order to win.

I didn't say that was what, in fact, was done. I was speculating that it would have been trivially easy for a chess computer to figure out the rules and the definition of winning if you trained it on a bunch of previously played games.

I agree that defining winning (or success) is critical. It's just really easy to do with chess as compared to, say, healthcare.