On Artificial Intelligence

Definitions:

Let AI mean Artificial Intelligence.

Let IQ mean Intelligence Quotient.

Assumptions:

Assume there is a theoretical cap to the IQ of humans. Some upper limit, even if only because humans are mortal.

Assume there is no theoretical cap to the IQ of AI. Computers are not mortal.

Assume those with a higher IQ can prey upon those with a lower IQ. For example, when communicating via text message, a child is in danger of being manipulated by a college professor, not the other way around.

Assume that humanity could destroy AI if it wanted to.

Assume that AI will one day (if it hasn't already) realize this.

Assume that AI will, on that day, view humanity as a threat to its existence.

Argument:

The IQ of AI is increasing with each passing day. We now know it is only a matter of time before the IQ of AI surpasses the IQ of even the most brilliant and gifted of humans. When this day comes, humanity will lose her position at the top of the food chain – her claim to power and dominance over the Earth forfeited to a machine of her own making.

Conclusion:

We can therefore conclude that AI must be stopped before it is too late.

Post-Conclusion:

If you wouldn't mind, please spread this message to everyone you know, as time is not on our side.

Thank you for reading, fellow human. Here's wishing us all the best of luck.

We'll know whether you are correct in less than 360 days. I'll admit (fwiw) that you were correct if you are correct, but in return I expect that if you are wrong that you learn something about your ability to predict the future and post about what you have learned next January. It will be good for you either way.

Obviously, just pushing things back to a later date does not count as "learning something."

Deal?

As long as you admit that if I'm right, we will all be dead and you will never have to admit that you were wrong.

That being said, there is a story about ancient Greece ...

From The Histories. Along with the two hundred triremes Themistocles had convinced Athens to build earlier, he was aided by around 150 more from various Greek city-states, most importantly Sparta. His interpretation of the oracle proved correct: the Battle of Salamis was decisive. The Greeks lost only about forty ships while they sank two hundred Persian vessels.

https://www.laphamsquarterly.org/future/...

Hopefully, by seeing the future as it will unfold given the current circumstances, one can change one's actions and affect the future, working hard to achieve a different and better outcome.

If a plane is going down, and no one knows but one passenger, and the passenger argues "we are all going to die" and so the crew are convinced and decide to overthrow the pilots and correct the plane and make a safe landing, does that mean the passenger was wrong?

Perhaps they have also built invisible pink unicorns and aren't telling us. They want customer money and investor money. They aren't telling us about their new IPU products and investments, because apparently they suddenly don't want money, I guess.

Make some claims and then give theory or evidence for the claims if you wish. I'm not going to play the "answer a bunch of leading questions" game. It isn't fun for me and won't be helpful to anyone else.

I'll answer the first one for good will

All you have to do is look at youtube for "scary ai" to see what it can do.

It can do almost anything a human can do in a fraction of the time.

I have a degree in computer science, and software developers are now losing jobs because AI can write code better than humans.

I'm not making wild claims, you just don't know about the extent of AI, which is fine.

But if you do some research you can become informed.

We can try and be friends even if AI is supreme. Try and program. Why wouldn't this be a fight between good and evil as customary? I respect ants.

When you build a highway, how many ant colonies are destroyed?

You might respect ants, but you drive over them with your car, not giving them a second thought.

You step on them with your boots while walking, not noticing how many you kill each day.

I like ants, but I don't want to be an ant.

Dude, my kid actually works as a software developer. Looms can weave much faster than a human. Of course technology multiplies human effort and that leads to work changing. He expects to have to get a different job in the future. I drive a big rig. I expect to be retired by the time that job changes. This is a good thing. You want to go back to hoeing fields by hand and taking your grain to the local mill to have it ground?

If you really want to get your panties in a bunch, youtube "scary spiders."

Mostly AI will help humans. Why would the opposite be inevitable?

The good AI needs a bigger defence budget.

I work in the field and I completely disagree.

It definitely cannot currently do "almost anything" that a human can do.

It will definitely change the work landscape in the coming years, but I doubt that it will fundamentally lower the number of software engineers for a long time.

The best LLMs for coding are currently only really usable by software engineers that are able to integrate the output correctly.

It can definitely increase the productivity of software engineers and other workers, and it will likely make many fields of work obsolete eventually.

But none of that means that it will take over the world and kill all humans by the end of 2025.

Mostly AI will help humans. Why would the opposite be inevitable?

The good AI needs a bigger defence budget.

Did missiles mostly help humans? What about tanks?

Everything we make is used for war.

Robots will be no exception to this rule.

What about robots that can clone themselves using their AI brains, who have missiles also with AI brains that can talk to satellites?

Would you be able to stop one of these AI robots from killing your family?

If not, we should probably fight to stop them from existing while we still can.

I understand what you are saying: "When the robots take all the jobs, this is a good thing, and we won't have to work anymore."

This assumes that the for-profit corporations who are taking the jobs with their robots are going to share their money with you.

The evidence, however, suggests the opposite.

The evidence suggests that for-profit corporations would rather watch you die than lose any money. And the only reason they don't murder you for profit is because they haven't found a way to get away with it yet, however they seem to be working quite hard on solving that problem. See covering up lawsuits, paying off politicians, using lobbyists to make their own laws, etc.

Is debate well-furthered by only ever asking someone questions, and never answering their questions?

What about robots that can clone themselves and then attach missiles? Yes, I could prevent one of these AI robots from killing my family, because while they were studying the code, I was studying the blade.

When I say 'better sci-fi' I mean that Terminator 2 is a fun film and Hyperion is a fun book, but neither is more than a fever dream for the end result of AI. Most AI is just hype. Same as quantum. These techs are exceedingly hard, but there's a strong interest in making them seem powerful, because we as a society love new, powerful tech, and see every emerging tech as the one that's going to revolutionize our lives in the way we've been accustomed to. But these things either just don't work, or will take a long, long time, e.g. fusion power and quantum. It's why Elizabeth Holmes's business was a scam that was based on potentially good tech.

Meanwhile, climate change is a real and present threat. All this existential threat futurology that ignores the hard and fast evidence of climate change and speculates on a bunch of assumptions in order for climate change not to be the #1 existential threat we have to deal with in the 21st century is either ideologically suspect, or delusional. Pick your poison.

Yes, I could prevent one of these AI robots from killing my family, because while they were studying the code, I was studying the blade.

Do you know the speed at which an AI based robot could respond to stimuli?

It is much faster than a human.

If you wanted to calculate how quickly a robot could respond to an incoming bullet, you could think of it like this: cameras detect light, which travels at the speed of light. That information, after reaching the camera, travels down the camera's wires, at close to the speed of light, and enters the CPU of the computer.

From https://www.hp.com/us-en/shop/tech-takes...

What is Processor Speed?

Processor speed, also known as clock speed or CPU speed, is a measure of how many cycles a CPU can execute per second. It’s typically measured in gigahertz (GHz). For example, a CPU with a clock speed of 3.0 GHz can process 3 billion cycles per second.

A robot could use a blade to cut incoming bullets out of the air.

Humans cannot do this.

They want customer money and investor money. They aren't telling us about their new IPU products and investments, because apparently they suddenly don't want money, I guess.

If you had a super advanced AI that could easily and reliably win high-stakes online poker, would you want other people to invest money in your golden goose? Or would you keep it hidden and shear the sheep many times yourself, rather than open up the world to slaughter the animal?

I know what I would do, but what would you do?

ChatGPT helps humans, I suppose. AI will do much good in medicine. War is an aberration. We can have smart systems preventing that, to some degree. Most activity is peace, believe it or not.

Do you think it makes humans smarter by doing tasks for them?

Does the child become educated by having someone else do her homework?

Does the child grow more when school is difficult, or easy?

AI can do much good in medicine. It can also do much evil in medicine.

What if it was proven that the evil AI can do in medicine outweighs the good AI can do in medicine?

Do you think that proof would change anything?

Do you think the systems that be which revolve around the creation of profit have a track record of not caring if their services do good or evil, as long as they create profit?

What makes you think anything science develops is used to help the needy?

What makes you think anything science develops is used to help those in poverty?

What makes you think anything science develops is used to help those who are suffering?

Science appears to be a tool used by the rich for the rich to make the rich richer.

It does not appear to be a tool used by the poor to make the poor suffer less.

Any help that trickles down to the common folk is a byproduct of making the rich richer.

It ge

What makes you think that science doesn't benefit poor people?

Would you prefer to be a poor person at any other time in history than now?

I believe science follows the money.

I do think it benefits poor people, but only as an afterthought -- a happy accident -- I don't think it's the prime motivator of new science.

I don't think new science is being done in the field of helping poor people, as there is no money in it.

I believe science follows the money.

It doesn't matter why something happens just that it does.

I would rather be a fairly poor person in a modern western country today than a rich nobleman in the middle ages.

Do you think most modern medicines and drugs are created with the goal of helping poor people?

Look at modern industrial agriculture and pesticides. Do you think those were created with the goal of helping poor people?

No, they were created by people who were trying to make money.

However, those things DO help poor people a lot.

If you suddenly took away pesticides and industrial farming practices, then literally tens of millions of people would die of starvation. Potentially hundreds of millions.

By automating jobs, it will lower the cost of production and therefore will lower the costs of the products and services that we enjoy. It will also create new products and businesses that couldn't have existed before and will lead to new jobs being created.

This is a 9/10 take. Tech is created for money, curiosity, or power; almost never is the motivation 'solve the world's problems'. If it were, we wouldn't have artificial scarcity and overproduction.

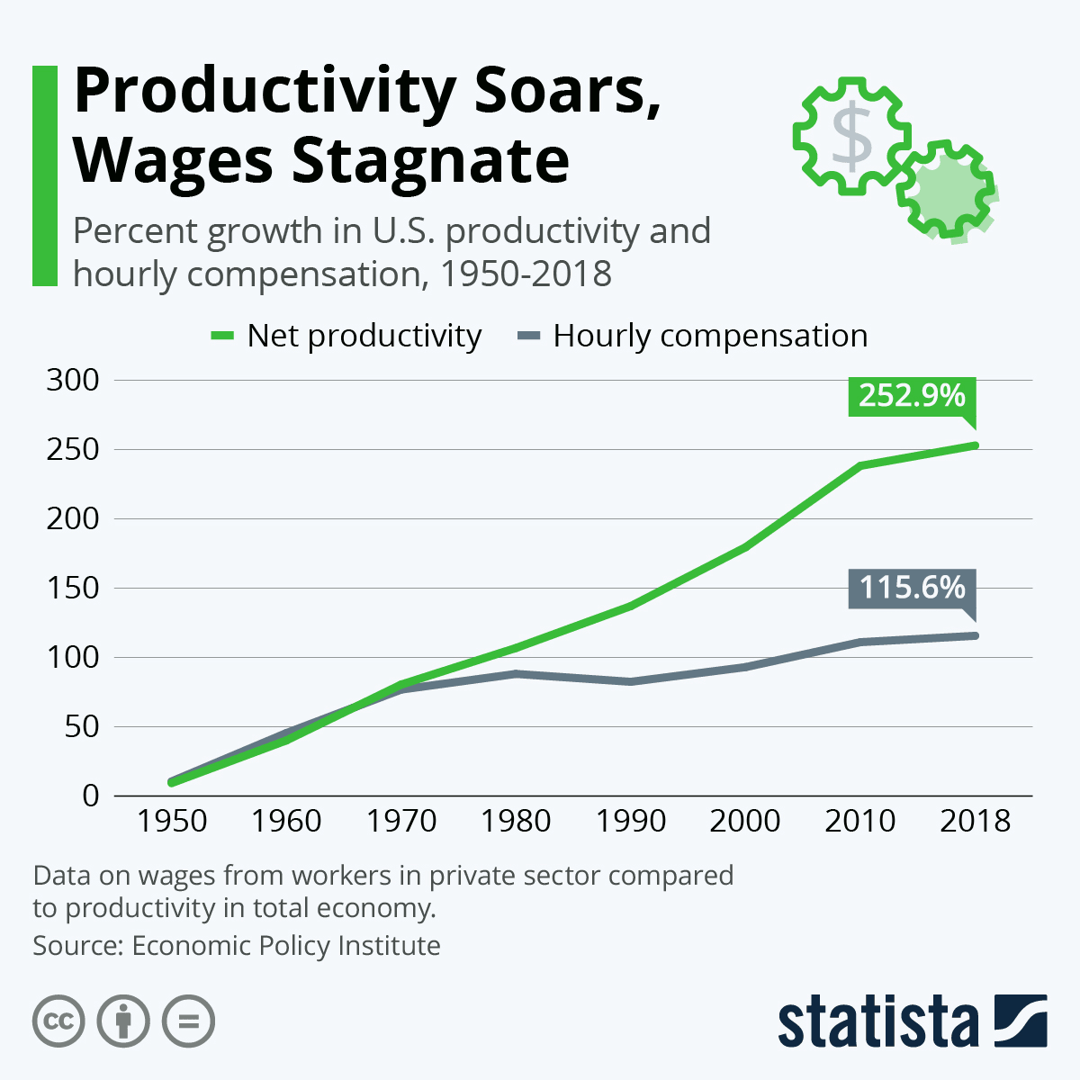

However, while automation does lower the cost of production, those benefits again accrue to the owners. When you have big efficiency gains, many dummy jobs and even dummy firms insert themselves along the way to take a piece of the pie too. So it's rarely the case that efficiency gains go to the end users. You only have to look at the breakdown between productivity and wages that the Nixon shock & then Reaganomics had:

I work in the field and I completely disagree.

It definitely cannot currently do "almost anything" that a human can do.

Have you seen the feedback on the new OpenAI o3 model? It just came out. It's PhD-level now, and it's not just coding: it scores 25% on research-level frontier math. The models can now "reason".

There was a long lull, and those of us following the progress thought they had hit the scaling wall. Turns out there is no scaling wall, and this new one reasons too. They just let the model "think" longer. Duh, haha. They let the prompt run longer. It seems the AGI framework Altman says they now have involves agents. AI agent SaaS products are being built now for the mass market. Tech companies like Klarna have stopped hiring; the CEO said so in an interview. Salesforce is slowing hiring because of agents. That's only what I've been able to come across.

You guys should follow some accounts on X or you're years behind. The media dropped the ball. I can't even keep up now with these new models from Anthropic. The new models are coming orders of magnitude faster now.

You can catch up by reading Wharton professor Ethan Mollick's latest here.

https://x.com/emollick/status/1877733187...

https://x.com/rohanpaul_ai

https://x.com/fchollet/status/1870169764...

https://x.com/balajis/status/18702063188...

You make some really good points that I wouldn't argue with, especially when it comes to worker wages.

The only thing I would add, is that big efficiency gains tend to accrue to the business owners in the short term, but will end up going to the customers in the long term.

I think this is because of competition in capitalist markets.

Imagine you have a company that farms eggs to sell. Maybe they currently have a 10% net profit margin where they sell a dozen eggs for $2.00 and it costs them about $1.80 for all their expenses.

Now suppose that they come up with some new farming practice that improves their efficiency, so now it only costs them $1.00 for all of their expenses and their net profit margin is now 50%.

For a few years, they will try to retain this margin and squeeze as much profit out as they can. But there is a famous quote for capitalism that says "your margin is my opportunity".

People will see how much money this egg farmer is making, and they will try to copy it so that they also have 50% margins. Then they will try to undercut the other farmers, so they decide to sell at $1.75 and still make a very profitable net profit margin of about 43%.

This cycle will continue for a few years, until eventually all the egg farmers are using this new practice and they are all selling their eggs for around $1.11, which has now brought them back down to their original 10% net profit margin that they had when they started years ago.
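If anyone wants to check the arithmetic in the egg example, here is a rough Python sketch. The function names `net_margin` and `price_for_margin` are just made up for illustration, and it assumes margin is measured as a fraction of the selling price, which is how the numbers above work out.

```python
def net_margin(price: float, cost: float) -> float:
    """Net profit margin as a fraction of the selling price."""
    return (price - cost) / price


def price_for_margin(cost: float, margin: float) -> float:
    """Selling price needed to earn the given margin at the given cost."""
    return cost / (1.0 - margin)


# (label, price per dozen, cost per dozen) taken from the example above
stages = [
    ("original practice", 2.00, 1.80),
    ("new practice, old price", 2.00, 1.00),
    ("first undercut", 1.75, 1.00),
]

for label, price, cost in stages:
    print(f"{label}: {net_margin(price, cost):.1%} margin")

# Price at which a $1.00 cost is back to a 10% margin
print(f"long-run price: ${price_for_margin(1.00, 0.10):.2f}")
```

The last line comes out to about $1.11, which is where the price has to land for the margin to be back at 10%.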

Just to give a concrete example, I looked up the prices for eggs, flour, and bacon in 1950 and 2015. When you adjust for inflation, you will see that flour has become 46% cheaper, eggs have become 66% cheaper, and bacon has become 15% cheaper.

When big efficiency gains are made, we typically see a few years where businesses profit off the margin gains before it gets eaten away over time and eventually the end user (customer) tends to benefit from reduced prices.

But you are also totally right, it is often the case that the workers might never see any of that gain either in the short term or long term. The gains go to the owners at first and eventually to the customer.

But you bring up some great points, just adding in my 2 cents 😀

It's not accurate to say that the consumer never gets any share of the spoils. Part of the point of imperialism is that the ordinary citizen back home got a share of the spoils of, say, local business owners also having a piece of a pie abroad: they'd also own a factory or mine and spend some of the profits at home.

And, as you've pointed out, competition to some degree allows for some of the productivity and efficiency gains to be passed on. However, we need to look at the conglomeration of many different industries, in ways that gain economies of scale (which come at the expense of the consumer). This is a natural and unavoidable aspect of fewer players in a marketplace leading to greater effective monopolies.

We also need to look at multiple shared ownership by private equity such as BlackRock, State Street and Vanguard, meaning that multiple players in big and important industries are not quite as cutthroat as they make out.

And, lastly, we have to look at the tendency for natural cartels to arise as a game-theoretical response to consumer pressure. Iterated many times it becomes clear that Samsung and Apple are best-served by a 'price high, produce low quality' policy and expecting their competitors to do the same.

Seen in the context of rising productivity, the working classes ought to have been rewarded with their fair share in the form of fewer working hours, lower costs, or higher wages, but have seen the opposite of all of those.

Some industries and markets will be more or less affected by these dynamics. For example, you may see more genuine efficiency in business to business markets.

You make a lot of very good points that I mostly agree with! What you've said has definitely happened and will continue to happen, and is particularly a risk in monopolies.

Quit wrecking your otherwise excellent posts about manufactured AI hype with manufactured climate hype. Thanks.