A thread for unboxing AI

The rapid progression of AI chatbots made me think that we need a thread devoted to a discussion of the impact that AI is likely to have on our politics and our societies. I don't have a specific goal in mind, but here is a list of topics and concerns to start the discussion.

Let's start with the positive.

1. AI has enormous potential to assist with public health.

Like all AI topics, this one is broad, but the short of it is that AIs have enormous potential when it comes to processing big data without bias, improving clinical workflow, and even improving communication with patients.

https://www.forbes.com/sites/bernardmarr...

In a recent study, communications from ChatGPT were assessed as more accurate, detailed, and empathetic than communications from medical professionals.

https://www.upi.com/Health_News/2023/04/...

2. AI has enormous potential to free us from the shackles of work.

OK. That's an overstatement. But the workplace-positive view of AI is that it will eventually eliminate the need for humans to do the sort of drudge work that many humans don't enjoy doing, thereby freeing up time for humans to engage in work that is more rewarding and more "human."

3. AI has enormous potential to help us combat complex problems like global warming.

One of the biggest challenges in dealing with climate issues is that the data set is so enormous and so complex. And if there is one thing that AIs excel at (especially compared to humans), it is synthesizing massive amounts of complex data.

https://hub.jhu.edu/2023/03/07/artificia...

***

But then there is the negative.

4. AI may eliminate a lot of jobs and cause a lot of economic dislocation in the short to medium term.

Even if AI eliminates drudgery in the long run, the road is likely to be bumpy. Goldman Sachs recently released a report warning that AI was likely to cause a significant disruption in the labor market in the coming years, with as many as 300 million jobs affected to some degree.

https://www.cnbc.com/2023/03/28/ai-autom...

Other economists and experts have warned of similar disruptions.

https://www.foxbusiness.com/technology/a...

5. At best, AI will have an uncertain effect on the arts and human creativity.

AI image generation is progressing at an astonishing pace, and everyone from illustrators to film directors seems to be worried about the implications.

https://www.theguardian.com/artanddesign...

Boris Eldagsen recently won a photo contest in the "creative photo" category by surreptitiously submitting an AI-generated "photo." He subsequently refused to accept the award and said that he had submitted the image to start a conversation about AI-generated art.

https://www.scientificamerican.com/artic...

https://www.cnn.com/style/article/ai-pho...

Music and literature are likely on similar trajectories. Over the last several months, the internet has been flooded with AI-generated hip hop in the voices of Jay-Z, Drake and others.

https://www.buzzfeed.com/chrisstokelwalk...

Journalist and author Stephen Marche has experimented with using AIs to write a novel.

https://www.nytimes.com/2023/04/20/books...

6. AI is likely to contribute to the ongoing degradation of objective reality.

Political bias in AI is already a hot-button topic. Some right-wing groups have promised to develop AIs that are a counterpoint to what they perceive as left-wing bias in today's chatbots.

https://hub.jhu.edu/2023/03/07/artificia...

And pretty much everyone is worried about the ability of AI to function as a super-sophisticated spam machine, especially on social media.

https://www.vice.com/en/article/5d9bvn/a...

Little wonder, then, that there is a broad consensus that AI will turn our politics into even more of a misinformation shitshow.

https://apnews.com/article/artificial-in...

7. AI may kill us, enslave us, or do something else terrible to us.

This concern has been around for as long as AI has been contemplated. This post isn't the place to summarize all the theoretical discussion about how a super-intelligent, unboxed AI might go sideways, but if you want an overview, Nick Bostrom's Superintelligence: Paths, Dangers, Strategies is a reasonable summary of the major concerns.

One of the world's leaders in AI development, Geoff Hinton, recently resigned from Google, citing potential existential risk associated with AI as one of his reasons for doing so.

https://www.forbes.com/sites/craigsmith/...

Numerous high-profile tech billionaires and scientists have also signed an open letter urging an immediate six-month pause in AI development.

https://futureoflife.org/open-letter/pau...

8. And even if AI doesn't kill us, it may still creep us the **** out.

A few months ago, Kevin Roose described a conversation he had with Bing's A.I. chatbot, in which the chatbot speculated on the negative things that its "shadow self" might want to do, repeatedly declared its love for Roose, and repeatedly insisted that Roose did not love his wife.

https://www.nytimes.com/2023/02/16/techn...

Roose stated that he found the entire conversation unsettling, not because he thought the AI was sentient, but rather because he found the chatbot's choices about what to say to be creepy and stalkerish.

I should add that Jimmy cannot scupper us by purposely picking a number with 4 distinct digits (our worst case) because he does not know our encoding scheme in advance. For example, if we choose to allocate 1 to small and 2 to large the resultant number may end up with 3 distinct digits, but if we do it vice versa it might end up with 2 distinct digits. In repeated trials we would randomise our encoding scheme each time so that Jimmy can't infer it from our prior guesses. No shenanigans for Jimmy.

There is an additional complexity that I'm pretty sure you are not accounting for. Jimmy raises his hand regardless of whether a selection hits on one, two, or three of his preferred attributes. (If you hit all four, he of course just says that you win.)

Not sure why you think I'm not accounting for it, the whole solution is predicated on this being the case!

Never mind. I misread what you wrote. My gut tells me that Case D isn't optimized.

For example, if Jimmy doesn't raise his hand on your first random selection, doesn't that give you additional information that you can use to exclude some of the remaining 23 combinations from which you are choosing at random?

Optimising case D doesn't add a huge amount to our overall win % as every % we gain here is a % of 24/256. If we could optimise case C down to a 1 in 2 pick from a 1 in 3 pick that would be massive, as we would go from 1/3 of 144/256 to 1/2 of 144/256, a gain of 24/256 or about 9.4 percentage points overall. So if I were looking to optimise, I'd definitely take a look at case C a lot more closely.

There is probably some algorithm we can come up with to optimise the worst case for case D. I think finding it is going to involve a fair amount of trial and error.

I'm not sure that you can guarantee there will be at least one hat for which he doesn't raise his hand in the first 5 picks. When he raises his hand, we gain no additional information. So if that's the case, we can't optimise the worst case scenario, which is what I've calculated. We might be able to optimise the best case & average case, but those require additional calculations anyway.

Say he is thinking 0123

We pick 0213 he raises his hand

We pick 1203 he raises his hand

We pick 2103 he raises his hand

If the solution is 0123, and your first guess is 3210, he won't raise his hand, at which point you can eliminate all remaining combinations that have 3 as the first digit, 2 as the second digit, 1 as the third digit, or 0 as the fourth digit. Right?

Yeah I ****ed that up. I edited my post, was hoping you hadn't started replying to it, too late!

But also keep in mind that all our calculations here are worst case. So we have to assume that we go down the unhappy path; assuming that we hit the jackpot and he doesn't raise his hand on the first number we pick is invalid for this calculation.

We do gain additional information when he raises his hand though, which is where I ****ed up. If we pick 0213 and he raises his hand, we know that the first digit is a 0 and/or the second digit is a 2 etc. which is more than we knew beforehand. Designing an algorithm around this is possible I'm sure, but would take a fair amount of tinkering.

(1)4/256 + (1)84/256 + (1/3)(144/256) + (1/4)(24/256) = 55.5%. We (as the group of 10) can take an even money bet on this game and win.
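For anyone who wants to check the case sizes, here is a minimal Python sketch. It assumes hats are encoded as four-digit base-4 codes and that Cases A to D correspond to codes with one, two, three, and four distinct digits respectively (that mapping is my reading of the thread, and the names `codes`, `by_distinct`, and `coefficients` are purely illustrative).

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# All 256 four-digit base-4 codes, e.g. (0, 1, 2, 3).
codes = list(product(range(4), repeat=4))

# Count codes by how many distinct digits they contain (1 to 4).
by_distinct = Counter(len(set(code)) for code in codes)

# Worst-case success coefficients quoted in the post (assumed to map to
# one, two, three, and four distinct digits respectively).
coefficients = {1: Fraction(1), 2: Fraction(1), 3: Fraction(1, 3), 4: Fraction(1, 4)}

# Weighted sum of per-case coefficients gives the overall lower bound.
lower_bound = sum(
    coefficients[k] * Fraction(by_distinct[k], len(codes)) for k in by_distinct
)

print(dict(sorted(by_distinct.items())))  # {1: 4, 2: 84, 3: 144, 4: 24}
print(f"{float(lower_bound):.1%}")        # 55.5%
```

Using `Fraction` keeps the sum exact (142/256), so the 55.5% figure is not a rounding artefact.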

Yeah, after doing some scratch math, I am convinced that the bolded coefficient for Case D is very wrong, and almost certainly much closer to 1 than 1/4. You alluded to the reasons.

Once you start using your remaining six guesses, you are able to rapidly eliminate combinations, regardless of whether Jimmy raises his hand. If, on your first guess, Jimmy doesn't raise his hand, then you can eliminate 14 of the remaining 23 combinations (i.e., all remaining combinations that have at least one digit in the same place as your guess). If Jimmy does raise his hand, then you can eliminate 9 of the remaining 23 combinations (i.e., all combinations that do not have any digits in the same place as your guess). And this ability to eliminate combinations continues with each successive guess, regardless of whether Jimmy raises his hand. You will never eliminate as many combinations on successive guesses as you do on the first guess, but as you eliminate combinations over six iterations, you dramatically increase your chances of binking the correct answer.
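The 14 and 9 figures can be checked by brute force. This is a minimal sketch that assumes Case D means the 24 codes using each of the digits 0 to 3 exactly once; the `feedback` helper is a hypothetical name for Jimmy's hand-raising rule as described earlier in the thread.

```python
from itertools import permutations

def feedback(secret, guess):
    """'win' if all four positions match, 'up' if one to three match, else 'down'."""
    hits = sum(s == g for s, g in zip(secret, guess))
    if hits == len(secret):
        return "win"
    return "up" if hits > 0 else "down"

candidates = list(permutations(range(4)))  # the 24 Case D codes
guess = (3, 2, 1, 0)                       # any first guess behaves the same by symmetry
remaining = [c for c in candidates if c != guess]

# Codes that would have made Jimmy raise his hand, and codes that would not.
shares_a_position = [c for c in remaining if feedback(c, guess) == "up"]
shares_no_position = [c for c in remaining if feedback(c, guess) == "down"]

print(len(shares_a_position), len(shares_no_position))  # 14 9
```

So a lowered hand rules out the 14 codes that share a position with the guess, and a raised hand rules out the 9 that share none.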

I haven't thought about Case C yet.

Fair enough. I approached it as I would a complex problem at work, essentially: design an overarching framework that allows us to split the problem into sub-tasks, implement an initial solution for each task that's "good enough", then optimise each sub-task individually as necessary. After all, the initial question wasn't "what's the answer?", it was "how would you calculate the answer?" I would consider the base 4 encoding + 4 initial guesses the "framework", which then allows us to optimise each case individually.

Note that D in total contributes less than 10 points to our overall win %, so even if we manage to find a perfect algorithm for D we won't be increasing our overall "performance" by more than 7.5%. If I were solving this problem for practical reasons I'd definitely be focusing on optimisations for case C as that's where the big wins are, but I understand that from a theoretical standpoint finding the algorithm for case D may be more interesting.

For Case C, the coefficient is also far too low because, as you mention, you are taking the worst case scenario when using the masking method to uncover each of the first three digits. I also want to give some more thought to whether the masking method is the most efficient strategy, even as you move to the second and third digit. It may not be, in part because of our ability to eliminate combinations even without using a masking method (as in Scenario D), and in part because each time you use the masking method, you deprive yourself of an opportunity to simply bink the answer by guessing a combination that conceivably could be correct.

I am relatively certain that, over the entire problem, optimal strategy will yield a better than 75% chance of picking Jimmy's hat.

Agreed with the above. More generally, I don't know how to a) find a "good" algorithm or b) prove that a given algorithm is or isn't the optimal algorithm. The algorithms I provided were my best guesses. It's likely that even case B is not optimised, it just gets us to 100% within the required number of guesses, so it doesn't need to be. Once the framework is in place, it essentially becomes a pure algorithmic optimisation problem, like writing an efficient sort for example.

More generally, I don't know how to a) find a "good" algorithm or b) prove that a given algorithm is or isn't the optimal algorithm.

I have the same issue.

It's likely that even case B is not optimised, it just gets us to 100% within the required number of guesses, so it doesn't need to be.

I didn't even consider optimization for Case B because it didn't matter for the purposes of my question.

If you manage to get e d'a to have a look at this, he might well be able to come up with something better than I did. He seems to know a lot about comp sci and algorithmic complexity. I haven't studied anything like that in depth, so there are probably both theoretical and practical approaches to optimisation problems like this that I just have never learnt about.

For Case C, the coefficient is also far too low because, as you mention, you are taking the worst case scenario when using the masking method to uncover each of the first three digits.

Just on this point - when evaluating algorithmic efficiency, you usually have a best case, worst case and average case statistic for a given algorithm. Our best case is trivially 100%, that seems easy enough - we can get lucky with the first pick, or we can get lucky and hit Case A, or a bunch of other things can happen. The worst case is what I've been trying to calculate, and it gives us a lower bound for our expected success rate on repeated trials. The average case seems like it would be more difficult to calculate, as you need to take the worst case paths and all the other paths and somehow average them out. That seems very daunting. But I believe that would give us our actual expected success rate, not just the lower bound for it.
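One way to get a feel for the average case without enumerating every path is to simulate it. The sketch below is only an illustration under stated assumptions: it looks at Case D alone (the 24 codes with four distinct digits), gives the player six guesses, and uses a hypothetical strategy of guessing at random among the codes still consistent with Jimmy's responses. Nobody in the thread has claimed that strategy is optimal, and the function names are made up for this example.

```python
import random
from itertools import permutations

def feedback(secret, guess):
    """'win' if all four positions match, 'up' if one to three match, else 'down'."""
    hits = sum(s == g for s, g in zip(secret, guess))
    if hits == 4:
        return "win"
    return "up" if hits else "down"

def play_case_d(secret, guesses_left, rng):
    """Assumed strategy: always guess at random among still-consistent codes."""
    candidates = list(permutations(range(4)))
    for _ in range(guesses_left):
        guess = rng.choice(candidates)
        result = feedback(secret, guess)
        if result == "win":
            return True
        # Keep only codes that would have produced the same response.
        candidates = [c for c in candidates if feedback(c, guess) == result]
    return False

def estimate_case_d(trials=5000, guesses_left=6, seed=0):
    """Monte Carlo estimate of the Case D success rate under the strategy above."""
    rng = random.Random(seed)
    secrets = list(permutations(range(4)))
    wins = sum(play_case_d(rng.choice(secrets), guesses_left, rng) for _ in range(trials))
    return wins / trials

estimate = estimate_case_d()
print(f"Estimated Case D success rate: {estimate:.1%}")
```

The result is an estimate of the average case for this one strategy, not a worst-case bound, so it should be compared against the 1/4 coefficient with that difference in mind.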

Usually with puzzles like this though, like "what's the minimum number of weighings needed to find the fake coin" etc, you are looking for the worst case scenario.

And finally - we don't really even know that the strategy of guessing the four repdigits first is optimal. There could well be other strategies for some number of first guesses that allow us to categorise the subsequent cases by something other than which distinct digits they contain. Maybe there is a method of initially guessing such that the subsequent cases are symmetrical, e.g. case 1 is 0-63, case 2 is 64-127 etc., and then we can have one algorithm that works for all the cases. Guessing repdigits to start was quite honestly just the first thing that came to mind.

Actually, in case C by guess 9 we have 2 guesses left for 5 numbers, so rather than ****ing about isolating digits we can take the 2 in 5 shot by picking 9 and 10 at random which is already better than 1/3. This brings our lower bound up to 59.22%.

I have an improvement on the algorithm.

Use the first 2 guesses to guess 0000, 1111. Proceed case by case. (U = Hand up, D = Hand down)

Case A: D,D (16 combos). This case is trivial, there are 16 combos which contain only 2,3. This can be solved easily with 8 guesses using the masking method or probably a bunch of other methods.

Case B: U,D or D,U (130 combos). I have an algorithm using a variation of the masking method which solves these 100% of the time (I think!). I'll post it if this line proves fruitful, it's a little finicky.

Case C: U,U (110 combos). Haven't thought about this yet.

This gives us (1)16/256 + (1)130/256 + (?)(110/256) = 57% + X. X has to be at least (1/8)(110/256) so this already gets us to 62.4% before we start optimising case C.

Following this line, it becomes a question of solving case C (numbers containing both 0 and 1, no other information available) in 8 guesses.
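The 16 / 130 / 110 split can be confirmed by enumeration. This is a minimal sketch assuming the same four-digit base-4 encoding; `response` is a hypothetical helper, and it counts an outright win on 0000 or 1111 as "U", which appears to be how the totals in the post were reached.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

codes = list(product(range(4), repeat=4))  # all 256 four-digit base-4 codes

def response(secret, repdigit):
    """'U' if a guess of four copies of `repdigit` matches any position, else 'D'.

    Assumption: an exact match (a win) is also counted as 'U' here.
    """
    return "U" if repdigit in secret else "D"

# Responses to the two opening guesses 0000 and 1111.
split = Counter((response(c, 0), response(c, 1)) for c in codes)

case_a = split[("D", "D")]
case_b = split[("U", "D")] + split[("D", "U")]
case_c = split[("U", "U")]

# Cases A and B are taken as solved; Case C gets the 1/8 floor from the post.
floor = Fraction(case_a + case_b, 256) + Fraction(1, 8) * Fraction(case_c, 256)

print(case_a, case_b, case_c)   # 16 130 110
print(f"{float(floor):.1%}")    # 62.4%
```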

The Nobel Prize in Physics has been awarded to two scientists, Geoffrey Hinton and John Hopfield, for their work on machine learning.

British-Canadian Professor Hinton is sometimes referred to as the "Godfather of AI" and said he was flabbergasted.

He resigned from Google in 2023, and has warned about the dangers of machines that could outsmart humans.

The announcement was made by the Royal Swedish Academy of Sciences at a press conference in Stockholm, Sweden.

American Professor John Hopfield, 91, is a professor at Princeton University in the US, and Prof Hinton, 76, is a professor at University of Toronto in Canada.

https://www.bbc.co.uk/news/articles/c62r...

Not sure it's really physics but ...

Bump:

Can anyone who understands programming/AI better than me walk through exactly how one "teaches" AI to be an ideological bad-faith actor?

And question for everyone, does anyone see how this can be problematic? Elon Musk is very adamant that teaching/prompting AI to lie for any reason is a very bad idea; but maybe he is just crazy and over-reacting and it is no biggie. I dunno.

My theory is that the AI gets a lot of “Trump” “Assassination” queries and doesn’t want to be the accessory to a real assassination m

Speaking of AI, this Destiny stream is both entertaining and unsettling. It's funny because he didn't realize how widespread the bot problem was on social media and it takes him forever to realize that these videos are AI generated, but it is eerie. If anyone actually decides to watch this, 26:45 is where it gets interesting. Strange times.

On top of the Nobel Prize for Physics going to AI, we have:

British computer scientist Professor Demis Hassabis has won a share of the Nobel Prize for Chemistry for "revolutionary" work on proteins, the building blocks of life.

Prof Hassabis, 48, co-founded the artificial intelligence (AI) company that became Google DeepMind.

This is a fun one. A study to see if AI helped doctors improve diagnosis.

It didn't, but the AI on its own outperformed them.

Importance: Large language models (LLMs) have shown promise in their performance on both multiple-choice and open-ended medical reasoning examinations, but it remains unknown whether the use of such tools improves physician diagnostic reasoning.

Objective: To assess the effect of an LLM on physicians’ diagnostic reasoning compared with conventional resources.

Design, Setting, and Participants: A single-blind randomized clinical trial was conducted from November 29 to December 29, 2023. Using remote video conferencing and in-person participation across multiple academic medical institutions, physicians with training in family medicine, internal medicine, or emergency medicine were recruited.

Intervention: Participants were randomized to either access the LLM in addition to conventional diagnostic resources or conventional resources only, stratified by career stage. Participants were allocated 60 minutes to review up to 6 clinical vignettes.

Main Outcomes and Measures: The primary outcome was performance on a standardized rubric of diagnostic performance based on differential diagnosis accuracy, appropriateness of supporting and opposing factors, and next diagnostic evaluation steps, validated and graded via blinded expert consensus. Secondary outcomes included time spent per case (in seconds) and final diagnosis accuracy. All analyses followed the intention-to-treat principle. A secondary exploratory analysis evaluated the standalone performance of the LLM by comparing the primary outcomes between the LLM alone group and the conventional resource group.

Results: Fifty physicians (26 attendings, 24 residents; median years in practice, 3 [IQR, 2-8]) participated virtually as well as at 1 in-person site. The median diagnostic reasoning score per case was 76% (IQR, 66%-87%) for the LLM group and 74% (IQR, 63%-84%) for the conventional resources-only group, with an adjusted difference of 2 percentage points (95% CI, −4 to 8 percentage points; P = .60). The median time spent per case for the LLM group was 519 (IQR, 371-668) seconds, compared with 565 (IQR, 456-788) seconds for the conventional resources group, with a time difference of −82 (95% CI, −195 to 31; P = .20) seconds. The LLM alone scored 16 percentage points (95% CI, 2-30 percentage points; P = .03) higher than the conventional resources group.

Conclusions and Relevance: In this trial, the availability of an LLM to physicians as a diagnostic aid did not significantly improve clinical reasoning compared with conventional resources. The LLM alone demonstrated higher performance than both physician groups, indicating the need for technology and workforce development to realize the potential of physician-artificial intelligence collaboration in clinical practice.

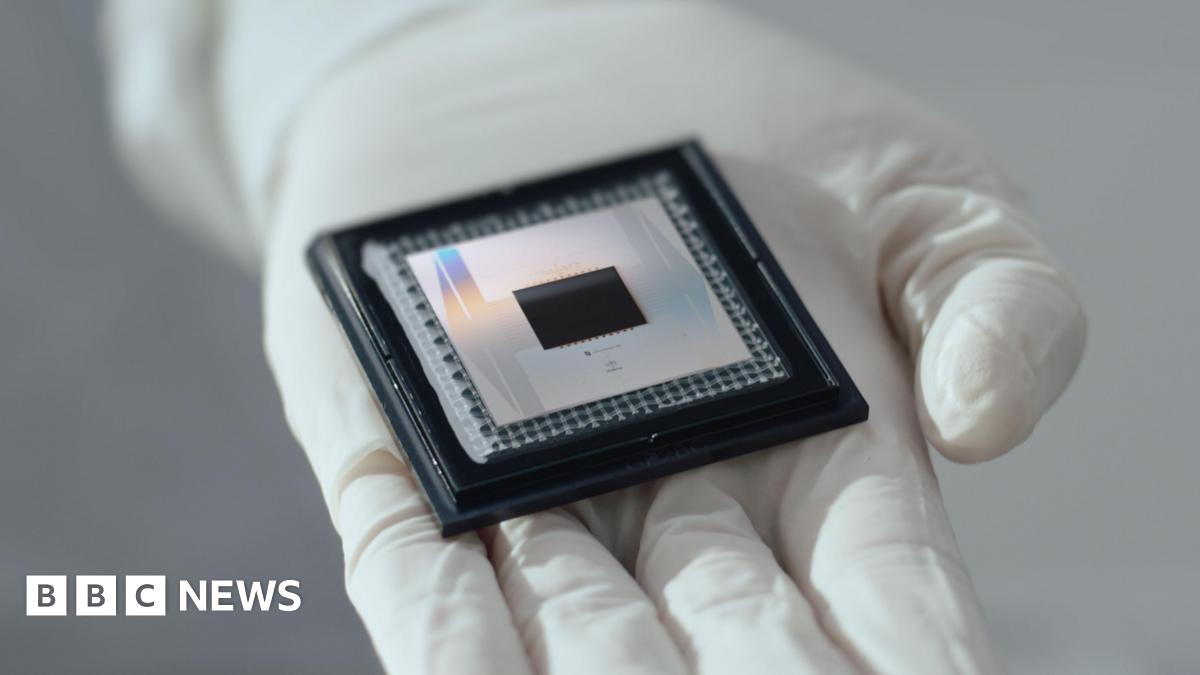

Google has unveiled a new chip which it claims takes five minutes to solve a problem that would currently take the world's fastest super computers ten septillion – or 10,000,000,000,000,000,000,000,000 years – to complete.

The chip is the latest development in a field known as quantum computing - which is attempting to use the principles of particle physics to create a new type of mind-bogglingly powerful computer.

Google says its new quantum chip, dubbed "Willow", incorporates key "breakthroughs" and "paves the way to a useful, large-scale quantum computer."

However experts say Willow is, for now, a largely experimental device, meaning a quantum computer powerful enough to solve a wide range of real-world problems is still years - and billions of dollars - away.

tick tock

As expected, significant advances are being made in the ability of AI to interpret moving images. This particular effort focuses on mimicking the way that organic brains interpret moving images:

"The brain doesn't just see still frames; it creates an ongoing visual narrative," says senior author Hollis Cline, PhD, the director of the Dorris Neuroscience Center and the Hahn Professor of Neuroscience at Scripps Research. "Static image recognition has come a long way, but the brain's capacity to process flowing scenes -- like watching a movie -- requires a much more sophisticated form of pattern recognition. By studying how neurons capture these sequences, we've been able to apply similar principles to AI."